How Emerging Technologies Are Shaping the Future of Event Video Production in 2026

If you've been to a corporate conference, music festival, or product launch lately, you've probably felt something different in the air. The cameras are smarter. The live streams feel cinematic. The gap between "being there" and "watching remotely" has nearly vanished.

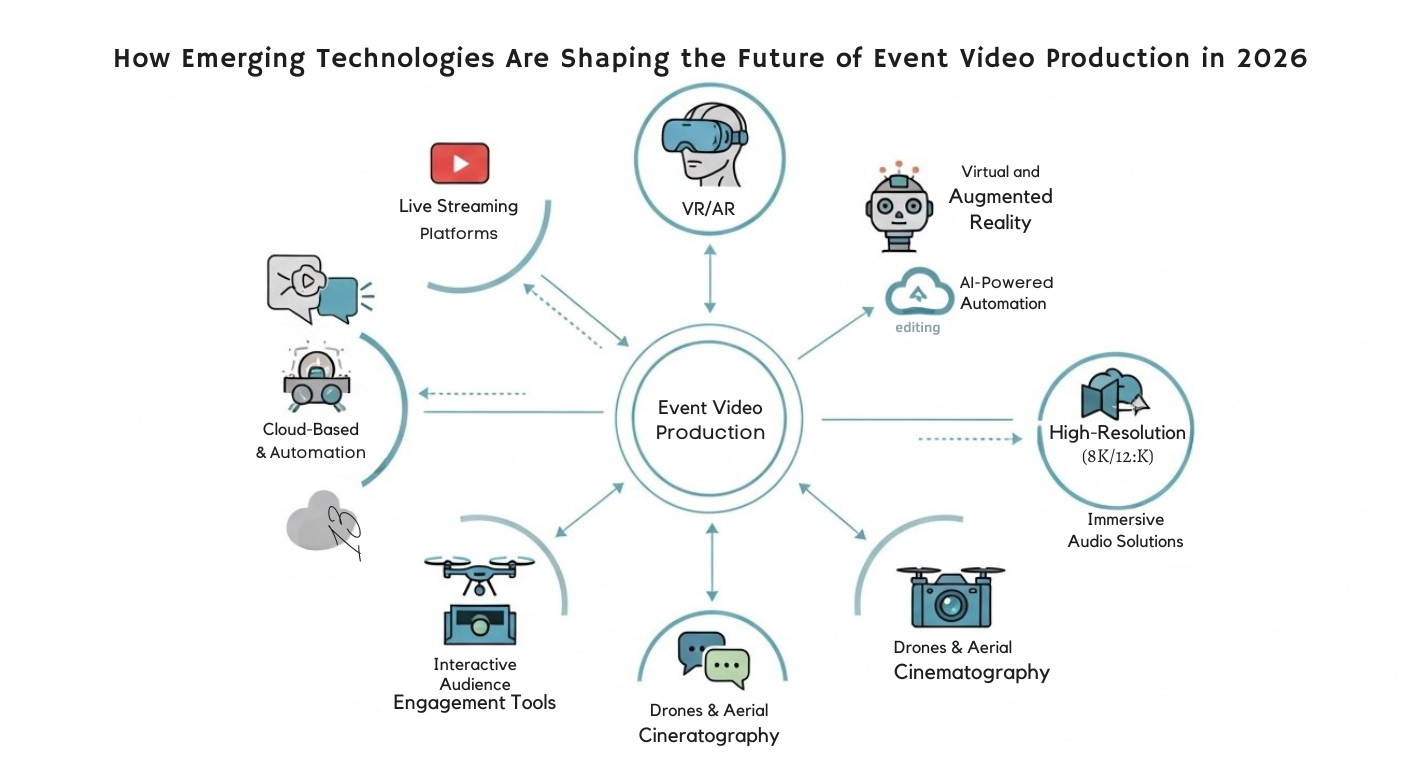

Emerging technologies are completely reshaping how events get filmed, produced, and delivered to audiences around the globe. Event video production in 2026 looks nothing like it did even three years ago.

Whether you're an event planner, a video producer, or a brand wanting your next event to truly stand out, understanding these shifts is no longer optional.

AI-Powered Editing Is Replacing Hours of Manual Work

Artificial intelligence isn't a buzzword anymore. It's the backbone of modern video production.

We're not talking about simple auto-cropping. Today's AI systems watch a live event through multiple cameras, identify the most powerful moments, and cut together polished highlight reels, all while the event is still happening.

Think about what that means.

A few years ago, production teams would shoot an event, haul footage back to an editing suite, and spend weeks making it shareable. Now? AI delivers broadcast-quality clips to social media within minutes.

The numbers back this up. The AI video analytics market is expected to grow from about $32 billion in 2025 to over $133 billion by 2030.
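To see what that forecast implies year over year, here's a quick back-of-the-envelope sketch. It takes the two figures quoted above at face value and treats 2025 to 2030 as five years of compounding; the function name `cagr` is just an illustrative choice.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in billions of USD; 2025 to 2030 is five years.
rate = cagr(32, 133, 5)
print(f"Implied annual growth: {rate:.1%}")
```

That works out to roughly a third more every year, which is why the forecast reads as so aggressive.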

Here's what AI handles in event video production right now:

- Syncing audio across multiple camera feeds automatically

- Color matching between different cameras in real time

- Generating captions in 30+ languages instantly

- Tagging and indexing footage by keywords, speakers, and topics

- Detecting key moments like applause, standing ovations, and product reveals

- Creating platform-specific edits for social media, websites, and email campaigns

All of this frees human editors to focus on what machines still can't do: storytelling, creative judgment, and emotional connection.
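To make the key-moment detection from the list above a little more concrete, here's a deliberately simple sketch. Production tools presumably rely on trained audio and video models; this toy version only assumes you have one loudness reading per second and flags stretches that stay loud long enough to look like applause. The function name, threshold, and sample values are all made up for illustration.

```python
from typing import List, Tuple


def find_loud_spans(
    levels: List[float], threshold: float, min_len: int
) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs where the level stays at or above
    `threshold` for at least `min_len` consecutive samples (end is exclusive)."""
    spans = []
    start = None  # index where the current loud run began, if any
    for i, level in enumerate(levels):
        if level >= threshold:
            if start is None:
                start = i
        else:
            if start is not None and i - start >= min_len:
                spans.append((start, i))
            start = None
    # Close a run that lasts until the end of the recording.
    if start is not None and len(levels) - start >= min_len:
        spans.append((start, len(levels)))
    return spans


# Hypothetical per-second loudness readings, normalized to 0..1.
audio_levels = [0.1, 0.2, 0.9, 0.9, 0.8, 0.1, 0.9, 0.1, 0.7, 0.8, 0.9]
print(find_loud_spans(audio_levels, threshold=0.7, min_len=3))
```

The minimum-length check is what keeps a single cough or door slam from being tagged as a highlight.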

Virtual Production and LED Walls Have Left Hollywood Behind

LED volume stages used to be reserved for blockbuster movies. Not anymore.

Virtual production technology is now accessible for corporate events, brand activations, and mid-sized conferences. The concept is simple but powerful.

Instead of renting expensive venues or flying speakers across the world, production teams place presenters in front of massive LED walls. These walls display photorealistic environments rendered in real time.

A CEO can deliver a keynote that looks like it's set in a futuristic city. A product launch can happen on a tropical beach. All without anyone leaving the studio.

Here's why event producers love it:

- Travel costs drop significantly

- Carbon footprints shrink

- Setup times go from days to minutes

- Environments change instantly between sessions

- Volumetric capture lets viewers explore scenes in 360 degrees

For clients who want cinematic quality without Hollywood budgets, this is where the industry is heading.

Spatial Computing and Mixed Reality Are Rewriting the Audience Experience

Apple Vision Pro and similar devices have pushed spatial computing from science fiction into everyday event production.

This technology changes something fundamental: what it actually means to "attend" an event.

Picture a product launch. Instead of watching a flat video, remote attendees examine a 3D model from every angle. They walk around it virtually. They interact with its features. That's already happening in 2026.

Here's how spatial computing is being used:

- Spatial keynotes where presenters bring 3D content to life in real space

- Virtual venue walkthroughs using platforms like Matterport

- 3D floor plan collaboration that catches layout problems early

- Immersive product demos letting remote audiences interact with objects

- Mixed-reality experiences that blend physical stages with digital overlays

The old divide between "being there" and "watching a screen" is disappearing fast.

5G and Cloud-Based Workflows Have Eliminated the Bottleneck

Anyone who's tried live streaming from a convention center with spotty Wi-Fi knows the pain. Buffering. Dropped frames. Audio nightmares.

Those days are mostly over.

5G provides the bandwidth for high-resolution, multi-camera live streams with minimal delay. Adaptive bitrate streaming adjusts quality automatically based on each viewer's connection.
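The core idea behind adaptive bitrate streaming can be sketched in a few lines. Real players use more sophisticated logic (buffer levels, smoothing, and so on); this minimal version just picks the highest rendition that fits within a safety margin of the viewer's measured throughput. The bitrate ladder, the 0.8 safety factor, and the function name are assumptions for illustration, not values from any particular platform.

```python
from typing import List

# Hypothetical bitrate ladder in kilobits per second, lowest first.
LADDER_KBPS: List[int] = [800, 2500, 5000, 8000, 16000]


def pick_bitrate(
    throughput_kbps: float,
    ladder: List[int] = LADDER_KBPS,
    safety: float = 0.8,
) -> int:
    """Pick the highest rendition that fits inside a fraction of the
    measured throughput; fall back to the lowest one if nothing fits."""
    budget = throughput_kbps * safety
    fitting = [bitrate for bitrate in ladder if bitrate <= budget]
    return max(fitting) if fitting else min(ladder)


# A viewer on a strong connection versus one on congested venue Wi-Fi.
print(pick_bitrate(25000))
print(pick_bitrate(1500))
```

Leaving headroom below the measured throughput is a common way to avoid rebuffering when the connection dips for a moment.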

Cloud-based post-production has also become standard. Here's what that looks like in practice:

- Editors, producers, and clients collaborate in real time from anywhere

- Cuts get reviewed, notes get added, and approvals happen instantly

- No more waiting for one editor to process everything locally

- Projects that took weeks now get turned around in days, sometimes hours

Behind the scenes, dedicated AV-optimized networks are now a core part of event planning too. These support:

- AV-over-IP streaming

- Live show control

- Real-time monitoring

- Simultaneous multi-platform broadcasting

All running without interfering with each other. That's a massive upgrade from even two years ago.

Intelligent Lighting and Projection Mapping Are Transforming Visuals

This area is evolving fast but rarely gets the attention it deserves.

Intelligent lighting systems now adapt automatically to what's happening on stage. They respond to speaker movements. They shift with content changes. Some even react to audience energy levels.

The lights aren't just illuminating the room anymore. They're telling the story.

Projection mapping pushes this even further. Ordinary surfaces (walls, stages, ceilings, even 3D objects) become dynamic, immersive displays.

Real-world applications include:

- Concert stages synchronizing visuals with music in real time

- Corporate events where products appear to emerge from walls

- Weddings using animated backdrops and interactive dance floors

- Trade show booths transformed into fully immersive brand experiences

- Conference stages with environments that shift per session theme

For video production specifically, intelligent lighting improves camera performance dramatically. When lighting is designed for both the live audience and the cameras at the same time, footage comes out far more polished without heavy color correction later.

How 2026 Event Video Production Compares to Traditional Methods

Here's a clear side-by-side look at how much has changed:

| Production Element | Traditional Approach (Pre-2024) | 2026 Emerging Tech Approach |
|---|---|---|
| Live Editing | Manual switching by a human director | AI-assisted switching with highlight detection |
| Post-Production | 2–6 weeks for deliverables | Same-day highlights; full edits in days |
| Remote Audiences | Basic Zoom or webinar streams | Immersive multi-angle streams with interactivity |
| Set Design | Physical sets needing days of construction | LED virtual stages changed in minutes |
| Captioning | Manual transcription and translators | Real-time AI captioning in 30+ languages |
| Engagement | Static polls, post-event surveys | AR overlays, gamification, live dashboards |
| Cost Structure | High fixed costs (travel, crew, sets) | Lower variable costs with AI and remote tools |
| Repurposing | Manual re-editing for each platform | AI-generated platform-specific versions |
| Lighting | Static preset plots | Intelligent systems adapting in real time |
| Security | Physical badge checks | Facial recognition integrated with AV |
| Pre-Production | 2D plans and site visits | Digital twin simulations and 3D walkthroughs |

Interactive and Shoppable Event Video Is Driving Revenue

Audiences in 2026 aren't just watching event videos. They're clicking, shopping, and buying inside them.

Clickable hotspots, embedded product tags, and in-video checkout options let viewers go from interest to purchase without ever leaving the video player.

Imagine a live-streamed fashion event where viewers click on any outfit and buy it instantly. Or a tech keynote where attendees pre-order the product being unveiled right from the video stream.

The video itself becomes the storefront.

New monetization strategies are also taking off:

- Premium content tiers with exclusive behind-the-scenes access

- Virtual VIP experiences offering unique camera angles

- On-demand access passes that extend event revenue beyond the live date

- Sponsored interactive overlays within live streams

- Tiered ticket pricing based on level of video interactivity

These approaches are turning event video from a cost center into a genuine revenue stream.

Hyper-Personalized Video Content Is Becoming the Standard

One-size-fits-all event recaps? They're quickly becoming outdated.

AI now lets producers create multiple versions of event content tailored to different audience segments, automatically, from the same source footage.

Here's a quick example. A healthcare company hosts a conference. Doctors get a highlight reel focused on clinical sessions. Hospital administrators get a version about operational insights. Marketing teams get a cut emphasizing brand messaging.

All generated automatically. All from the same raw footage.
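Here's a stripped-down sketch of how that kind of audience-specific cut could be assembled once footage has been tagged by topic. It assumes the tagging has already happened (by AI or by hand) and simply picks matching segments until a time budget runs out. The `Segment` class, the tag names, and the sample data are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Segment:
    title: str
    tags: Set[str]
    seconds: int


def build_cut(
    segments: List[Segment], interests: Set[str], max_seconds: int
) -> List[Segment]:
    """Keep segments, in running order, that share a tag with the audience's
    interests, skipping any that would push the cut past max_seconds."""
    cut: List[Segment] = []
    used = 0
    for seg in segments:
        if seg.tags & interests and used + seg.seconds <= max_seconds:
            cut.append(seg)
            used += seg.seconds
    return cut


# Hypothetical tagged footage from the healthcare conference example.
segments = [
    Segment("Opening keynote", {"clinical", "operations", "brand"}, 60),
    Segment("Clinical trial results", {"clinical"}, 90),
    Segment("Staffing and scheduling panel", {"operations"}, 80),
    Segment("Brand campaign reveal", {"brand"}, 45),
    Segment("Surgical robotics demo", {"clinical"}, 120),
]

for audience in ("clinical", "operations", "brand"):
    titles = [seg.title for seg in build_cut(segments, {audience}, 180)]
    print(audience, "->", titles)
```

Same footage, three different reels. The tagging is the hard part; once it exists, the selection step stays simple.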

AI personalization goes even deeper than content selection:

- Pacing and energy levels adjusted per audience demographic

- Graphics and branding customized for different regions or job roles

- Voiceover style and tone matched to viewer preferences

- Background music shifted to suit professional versus casual audiences

- Video length optimized per platform (short for social, long for on-demand)

The result? Higher engagement. Longer watch times. Much better post-event follow-through.

Real-Time Data and Emotion Analytics Are Shaping Live Production

This one surprises most people.

Some of the most innovative production teams in 2026 use real-time audience analytics to make decisions on the fly during live events.

Sensors, cameras, and software now measure engagement levels, attention patterns, and emotional responses, all while the event is happening.

Here's how that plays out:

- When a speaker starts losing the room, the production team sees it immediately

- They cut to a different camera angle or trigger a visual cue on screen

- Segment length gets adjusted in real time based on audience energy

- Post-production editors use the data to find the true highlight moments

- Final recap videos reflect what actually resonated, not editorial guesswork

It's a feedback loop that simply didn't exist before. The audience's reaction informs the production in real time, creating a more dynamic experience for everyone.

Autonomous Drone and Robotic Camera Systems Are Changing the Shot

Here's something almost nobody in the event production world is talking about yet.

Traditional event cinematography relies on fixed cameras and handheld operators. That limits what you can capture and means you need a large crew for full coverage.

AI-powered drones and robotic camera systems are changing that equation entirely.

What these systems bring to the table:

- Indoor-rated drones with obstacle avoidance and quiet motors

- Sweeping aerial shots of conference halls and exhibition floors without disturbing anyone

- Pre-programmed flight paths or real-time AI tracking of speakers on stage

- Robotic gimbal systems on tracks and rails reaching spots humans physically can't

- Consistent, repeatable cinematic movements without fatigue or missed cues

- Direct integration with AI editing tools for automated highlight assembly

These systems don't get tired. They don't miss cues. And they execute complex camera movements with pinpoint precision every single time.

For large-scale trade shows, outdoor festivals, and multi-venue conferences, autonomous cameras are quickly becoming a practical necessity.

Digital Twins Are Revolutionizing Event Pre-Production Planning

This is the second technology flying under the radar, and it's a big one.

A digital twin is a detailed virtual replica of a physical event venue. Think of it as a perfect 3D copy you can explore from your desk.

Traditional pre-production involved site visits, 2D floor plans, and lots of educated guessing. Where do the cameras go? Will that lighting rig block the projector? Can the back row see the screen?

Those questions were usually answered during load-in. Which meant expensive last-minute changes.

Digital twins flip that process entirely. Using LiDAR scanning, photogrammetry, and 3D modeling, teams build a precise virtual replica before ever stepping inside.

Here's what becomes possible:

- Test camera placements and angles in the virtual environment

- Preview lighting setups and see exactly how they'll look on camera

- Drop in stage designs and adjust them until they're perfect

- Check audience sightlines from every single seat

- Coordinate with remote team members who "walk through" the space virtually

- Run full production rehearsals without booking the physical venue

Teams that used to spend two or three days on-site for planning? They now handle it in an afternoon from their offices. And because everything's been tested virtually, load-in days run smoother with far fewer surprises.

Sustainability Has Become a Production Priority

This isn't just a feel-good trend. It's a business requirement.

Remote production, virtual sets, AI efficiency, and cloud workflows all shrink the carbon footprint dramatically compared to traditional methods.

No flying a 20-person crew. No shipping tons of set pieces. No powering energy-hungry on-site editing suites.

Many corporate clients now require sustainability reporting from production partners. Here's how the industry is responding:

- Energy-efficient LED walls replacing power-hungry projection systems

- Reusable scenic elements designed for multiple events

- Digital-first content delivery eliminating printed materials

- Optimized freight logistics reducing transportation waste

- Cloud rendering replacing local hardware setups

High production value and environmental responsibility aren't at odds anymore. In 2026, they go hand in hand.

Facial Recognition and Smart Security Are Merging With AV

Security at events used to be completely separate from video production. Not anymore.

Facial recognition integrated with AV and access control now serves a dual purpose. It speeds up attendee entry. And it provides real-time security monitoring.

Here's how the merger works in practice:

- Cameras already capturing broadcast footage also verify attendee identities

- Crowd flow gets tracked automatically throughout the venue

- Security teams receive real-time alerts if access rules are breached

- Unauthorized access to backstage or VIP areas gets flagged instantly

- Separate security camera systems become unnecessary

Transparency is essential. Attendees need to know when facial recognition is active. And organizers need clear data handling policies.

But when implemented thoughtfully, this technology improves both safety and production efficiency in meaningful ways.

What Event Producers Need to Do Right Now

Standing still isn't an option in 2026. The tools are here. They're affordable. And they deliver better results, faster, at lower cost.

Here's a quick action checklist for event producers:

- Evaluate where AI can take over repetitive editing and production tasks

- Explore virtual production options even on a small scale for your next event

- Upgrade streaming infrastructure to support interactive, high-quality experiences

- Build a digital twin of your next venue before pre-production begins

- Invest in AV-optimized network infrastructure from the start

- Start tracking real-time audience analytics during live events

- Prioritize sustainability in every production decision

And above all, remember that technology should enhance human creativity, not replace it.

The best event videos in 2026 still tell compelling stories. The tech just makes it possible to tell them faster, to more people, in more powerful ways.

FAQs

How is AI being used in event video production in 2026?

AI manages multi-camera switching, automates color correction, generates real-time highlight reels, creates platform-specific edits, and handles transcription and multilingual captioning. It takes over the repetitive work so human editors can focus on creative storytelling.

What is virtual production for events?

Virtual production uses LED walls and real-time rendering to create photorealistic digital environments. Speakers present in front of any backdrop without leaving a studio. It eliminates physical set construction and travel costs while delivering cinematic-quality results.

How has 5G changed live event streaming?

5G delivers higher bandwidth and lower latency. This enables multi-camera live streams in high resolution with minimal buffering, even in packed venues. It also supports real-time polling, Q&A, and multi-angle viewing options.

What is a digital twin in event planning?

A digital twin is a precise 3D virtual replica of an event venue. Production teams use it to test camera placements, preview lighting, plan staging, and check sightlines, all without visiting the physical location. It saves time, cuts costs, and leads to smoother productions.

Can small businesses afford these technologies?

Yes. Cloud-based tools, AI editing software, and scalable streaming platforms have dramatically lowered costs. Many tools run on affordable monthly subscriptions. Virtual production studios are available for rental too. A Hollywood budget is no longer necessary.

How do autonomous drones work at events?

Indoor-rated drones with quiet motors and obstacle avoidance capture aerial footage without disturbing attendees. They follow AI commands or pre-programmed paths to track speakers and crowds. Combined with robotic camera systems, they deliver cinematic coverage with smaller crews.

What role does spatial computing play?

Spatial computing through mixed-reality headsets lets attendees experience events in immersive 3D. Remote viewers interact with virtual objects and explore spaces in ways flat video can't offer. It's especially powerful for product launches and trade shows.

How do real-time analytics help live event production?

Sensors and AI measure audience engagement and emotional response during live events. Directors use this data to adjust camera angles, pacing, and content on the fly. Post-production teams use it to identify the moments that truly connected with audiences.

Final Thought

Event video production in 2026 is moving at a pace that would've been hard to imagine just a few years back. AI, spatial computing, autonomous cameras, digital twins, and immersive technologies aren't extras anymore. They're becoming the foundation of how great events get captured and shared with the world. But here's the thing that hasn't changed: the producers and brands who win are the ones who use these tools to serve the story, not the other way around. Technology opens doors. Creativity is what walks through them.